Google DeepMind is one of the leading research groups in artificial intelligence, with a long record of advances in machine learning and neural networks. One recent line of its work concerns analogical and step-back prompting, two techniques that aim to improve how AI systems reason by having them draw on prior knowledge and consider the underlying principles of a problem before solving it. This article examines both techniques, what the papers report, and what they may mean for future work on language models.

Introduction

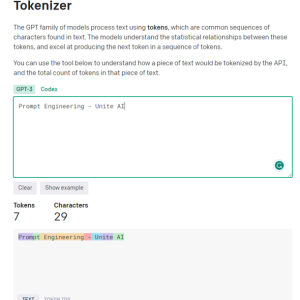

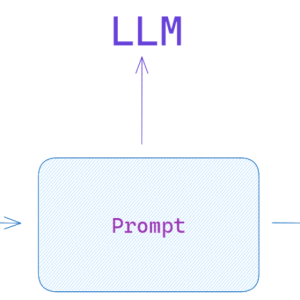

Prompt engineering focuses on devising effective prompts to guide Large Language Models (LLMs) such as GPT-4 in generating desired responses. A well-crafted prompt can be the difference between a vague or inaccurate answer and a precise, insightful one.

In the broader ecosystem of AI, prompt engineering is one of several methods used to extract more accurate and contextually relevant information from language models. Others include techniques like few-shot learning, where the model is given a few examples to help it understand the task, and fine-tuning, where the model is further trained on a smaller dataset to specialize its responses.

Google DeepMind has recently published two papers that explore prompt engineering and its potential to improve model responses across a range of tasks.

These papers are part of an ongoing effort in the AI community to refine how we communicate with language models, and they offer fresh insights into structuring prompts so that models handle queries more reliably.

This article walks through both papers, explaining the concepts, methodologies, and implications of the proposed techniques in a way that should be accessible even to readers with limited knowledge of AI and NLP.

Paper 1: Large Language Models as Analogical Reasoners

The first paper, titled “Large Language Models as Analogical Reasoners,” introduces a new prompting approach named Analogical Prompting. The authors, Michihiro Yasunaga, Xinyun Chen and others, draw inspiration from analogical reasoning, a cognitive process in which humans draw on past experiences to tackle new problems.

Key Concepts and Methodology

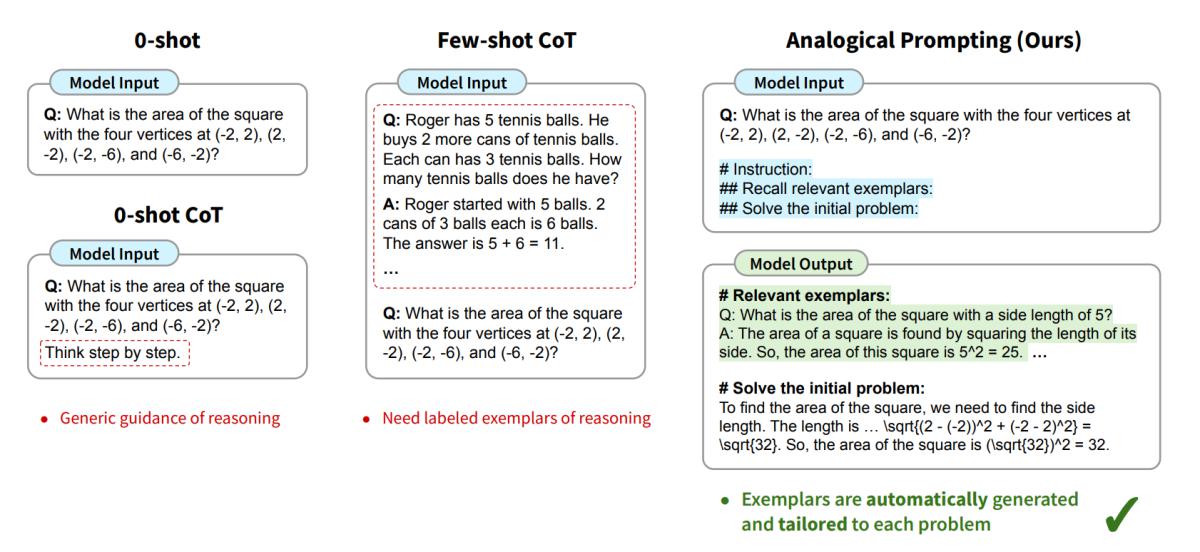

Analogical Prompting encourages LLMs to self-generate relevant exemplars or knowledge in context before proceeding to solve a given problem. This removes the need for labeled exemplars, which makes the approach general and convenient, and it tailors the generated exemplars to each specific problem rather than reusing a fixed set.

Left: Traditional methods of prompting LLMs rely on generic inputs (0-shot CoT) or necessitate labeled examples (few-shot CoT). Right: The novel approach prompts LLMs to self-create relevant examples prior to problem-solving, removing the need for labeling while customizing examples to each

Self-Generated Exemplars

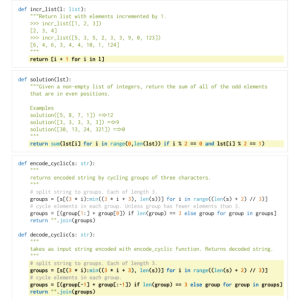

The first technique presented in the paper is self-generated exemplars. The idea is to leverage the extensive knowledge that LLMs have acquired during their training to help them solve new problems. The process involves augmenting a target problem with instructions that prompt the model to recall or generate relevant problems and solutions.

For instance, given a problem, the model is instructed to recall three distinct and relevant problems, describe them, and explain their solutions. This process is designed to be carried out in a single pass, allowing the LLM to generate relevant examples and solve the initial problem seamlessly. The use of ‘#’ symbols in the prompts helps in structuring the response, making it more organized and easier for the model to follow.

Key technical decisions highlighted in the paper include the emphasis on generating relevant and diverse exemplars, the adoption of a single-pass approach for greater convenience, and the finding that generating three to five exemplars yields the best results.
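As a rough illustration of the single-pass structure described above, the sketch below assembles such a prompt in Python. The function name `build_analogical_prompt`, its parameters, and the exact instruction wording are assumptions made for this example; the wording paraphrases the idea and is not the prompt text from the paper.

```python
def build_analogical_prompt(problem: str, num_exemplars: int = 3) -> str:
    """Build one prompt that asks the model to recall exemplars, then solve.

    Hypothetical helper: the wording below is a paraphrase for illustration,
    not the verbatim template used by the paper's authors.
    """
    if num_exemplars < 1:
        raise ValueError("num_exemplars must be at least 1")

    lines = [
        "Your task is to solve the problem below. First recall relevant",
        "problems as examples, then solve the initial problem.",
        "",
        # '#' headers give the response a structure the model can follow.
        "# Initial problem:",
        problem.strip(),
        "",
        "# Instructions:",
        "## Relevant problems:",
        f"Recall {num_exemplars} relevant and distinct problems. "
        "For each one, describe the problem and explain its solution.",
        "",
        "## Solve the initial problem:",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_analogical_prompt("What is the area of a square with side 5?"))
```

Because the exemplars and the final solution are requested in the same prompt, a single model call is enough; `num_exemplars` defaults to three, the lower end of the range the article reports as working best.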

Self-Generated Knowledge + Exemplars

The second technique, self-generated knowledge + exemplars, is introduced to address challenges in more complex tasks, such as code generation. In these scenarios, LLMs might overly rely on low-level exemplars and struggle to generalize when solving the target problems. To mitigate this, the authors propose enhancing the prompt with an additional instruction that encourages the model to identify core concepts in the problem and provide a tutorial or high-level takeaway.

One critical consideration is the order in which knowledge and exemplars are generated. The authors found that generating knowledge before exemplars leads to better results, as it helps the LLM to focus on the fundamental problem-solving approaches rather than just surface-level similarities.
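The ordering point can be made concrete with a small sketch. The helper `build_knowledge_exemplar_prompt` and its section headings are hypothetical and chosen for this example only; what matters is that the knowledge sections come before the exemplar section.

```python
def build_knowledge_exemplar_prompt(problem: str, num_exemplars: int = 3) -> str:
    """Build a prompt that asks for high-level knowledge before exemplars.

    Hypothetical helper with paraphrased wording; it is a sketch of the
    ordering idea, not the exact prompt from the paper.
    """
    sections = [
        "# Problem:",
        problem.strip(),
        "",
        "# Instructions:",
        # Knowledge first: core concepts and a high-level takeaway.
        "## Core concepts:",
        "Identify the core concepts or algorithms needed to solve the problem.",
        "",
        "## Tutorial:",
        "Write a short tutorial or high-level takeaway about those concepts.",
        "",
        # Exemplars second, so they build on the knowledge stated above.
        "## Example problems:",
        f"Recall {num_exemplars} relevant and distinct problems that use these "
        "concepts. For each one, describe the problem and explain its solution.",
        "",
        "## Solution:",
        "Solve the original problem step by step.",
    ]
    return "\n".join(sections)


if __name__ == "__main__":
    print(build_knowledge_exemplar_prompt("Find the longest increasing subsequence."))
```

Swapping the "Tutorial" and "Example problems" sections would produce the exemplars-first ordering that the authors found to work less well.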

Advantages and Applications

The analogical prompting approach offers several advantages. It provides detailed exemplars of reasoning without the need for manual labeling, addressing challenges associated with 0-shot and few-shot chain-of-thought (CoT) methods. Additionally, the generated exemplars are tailored to individual problems, offering more relevant guidance than traditional few-shot CoT, which uses fixed exemplars.
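For comparison, the two baselines mentioned above can be sketched as follows. The function names and the `Q:`/`A:` layout are assumptions for this example; "Let's think step by step." is the commonly used zero-shot CoT trigger phrase.

```python
from typing import Sequence, Tuple


def zero_shot_cot_prompt(question: str) -> str:
    """0-shot CoT: a generic reasoning trigger, with no examples at all."""
    return f"Q: {question}\nA: Let's think step by step."


def few_shot_cot_prompt(question: str, exemplars: Sequence[Tuple[str, str]]) -> str:
    """Few-shot CoT: the same hand-labeled worked examples precede every question."""
    parts = [f"Q: {q}\nA: {worked_solution}" for q, worked_solution in exemplars]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Hand-written exemplar, used here purely as illustrative data.
    fixed = [("What is 3 + 4?", "3 + 4 = 7. The answer is 7.")]
    print(zero_shot_cot_prompt("What is 12 * 5?"))
    print()
    print(few_shot_cot_prompt("What is 12 * 5?", fixed))
```

The few-shot variant needs someone to write and label `exemplars` in advance, and every question sees the same ones; analogical prompting instead asks the model to produce exemplars suited to the question at hand.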

The paper demonstrates the effectiveness of this approach across various reasoning tasks, including math problem-solving, code generation, and other reasoning tasks in BIG-Bench.

The tables below present performance metrics for various prompting methods across different models. Notably, the “Self-generated Exemplars” method achieves the highest reported accuracy in the cases highlighted here. On GSM8K, it reaches its best result with the PaLM2 model at 81.7%. Similarly, on MATH, it tops the chart with GPT3.5-turbo at 37.3%.

Performance on mathematical tasks, GSM8K and MATH

In the second table, for the models GPT3.5-turbo-16k and GPT4, “Self-generated Knowledge + Exemplars” shows the best performance.

Performance on Codeforces code generation task

Paper 2: Take a Step Back: Evoking Reasoning via Abstraction in Large Language Models

Overview

The second paper, “Take a Step Back: Evoking Reasoning via Abstraction in Large Language Models,” presents Step-Back Prompting, a technique that encourages LLMs to abstract high-level concepts and first principles from detailed instances. The authors, Huaixiu Steven Zheng, Swaroop Mishra, and others, aim to improve the reasoning abilities of LLMs by guiding them to follow a correct reasoning path towards the solution.

Depicting STEP-BACK PROMPTING through two phases of Abstraction and Reasoning, steered by key concepts and principles.

Let’s create a simpler example using a basic math question to demonstrate the “Stepback Question” technique:

Original Question: If a train travels at a speed of 60 km/h and covers a distance of 120 km, how long will it take?

Options:

1) 3 hours
2) 2 hours
3) 1 hour
4) 4 hours

Original Answer [Incorrect]: The correct answer is 1).

Stepback Question: What is the basic formula to calculate time given speed and distance?

Principles: To calculate time, we use the formula: Time = Distance / Speed

Final Answer: Using the formula, Time = 120 km / 60 km/h = 2 hours. The correct answer is 2) 2 hours.

Although current LLMs can easily answer the question above, the example simply demonstrates how the step-back technique works. In more challenging scenarios, the same technique can be applied to break down and address the problem systematically. Below is a more complex case demonstrated in the paper:

STEP-BACK PROMPTING on MMLU-Chemistry dataset

Key Concepts and Methodology

The essence of Step-Back Prompting lies in its ability to make LLMs take a metaphorical step back, encouraging them to look at the bigger picture rather than getting lost in the details. This is achieved through a series of carefully crafted prompts that guide the LLMs to abstract information, derive high-level concepts, and apply these concepts to solve the given problem.

The process begins with the LLM being prompted to abstract details from the given instances, encouraging it to focus on the underlying concepts and principles. This step is crucial as it sets the stage for the LLM to approach the problem from a more informed and principled perspective.

Once the high-level concepts are derived, they are used to guide the LLM through the reasoning steps towards the solution. This guidance ensures that the LLM stays on the right track, following a logical and coherent path that is grounded in the abstracted concepts and principles.
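The two phases described above can be sketched as two chained model calls. This is a simplified illustration, not the authors' implementation: `step_back_answer` is a hypothetical helper, `llm` stands for any function that takes a prompt string and returns the model's text, and the prompt wording is an assumption.

```python
from typing import Callable


def step_back_answer(question: str, llm: Callable[[str], str]) -> str:
    """Answer a question in two phases: abstraction, then reasoning.

    `llm` is a placeholder for whatever model client is in use; it is
    assumed to map a prompt string to a response string.
    """
    # Phase 1 (abstraction): ask for the underlying principle, not the answer.
    abstraction_prompt = (
        "What general concept or principle is needed to answer the question "
        "below? State the principle only; do not answer the question yet.\n\n"
        f"Question: {question}"
    )
    principles = llm(abstraction_prompt)

    # Phase 2 (reasoning): solve the original question, grounded in phase 1.
    reasoning_prompt = (
        f"Principles:\n{principles}\n\n"
        f"Question: {question}\n\n"
        "Using the principles above, reason step by step and give the final answer."
    )
    return llm(reasoning_prompt)


if __name__ == "__main__":
    # Stub model so the sketch runs without any API access.
    def echo_llm(prompt: str) -> str:
        return f"[model response to {len(prompt)} characters of prompt]"

    print(step_back_answer("How long does 120 km take at 60 km/h?", echo_llm))
```

Passing the model in as a plain callable keeps the sketch independent of any particular API; for the train example earlier in the article, the first call would ideally return "Time = Distance / Speed" and the second would apply it to reach 2 hours.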

The authors conduct a series of experiments to validate the effectiveness of Step-Back Prompting, using PaLM-2L models across a range of challenging reasoning-intensive tasks. These tasks include STEM problems, Knowledge QA, and Multi-Hop Reasoning, providing a comprehensive testbed for evaluating the technique.

Substantial Improvements Across Tasks

The results are impressive, with Step-Back Prompting leading to substantial performance gains across all tasks. For instance, the technique improves PaLM-2L performance on MMLU Physics and Chemistry by 7% and 11%, respectively. Similarly, it boosts performance on TimeQA by 27% and on MuSiQue by 7%.

Performance of STEP-BACK PROMPTING vs CoT

These results underscore the potential of Step-Back Prompting to significantly enhance the reasoning abilities of LLMs.

Conclusion

Both papers from Google DeepMind present innovative approaches to prompt engineering, aiming to enhance the reasoning capabilities of large language models. Analogical Prompting draws on analogical reasoning, encouraging models to generate their own examples and knowledge, which leads to more adaptable problem-solving without labeled exemplars. Step-Back Prompting, by contrast, focuses on abstraction, guiding models to derive high-level concepts and principles that, in turn, improve their reasoning.

These research papers provide valuable insights and methodologies that can be applied across various domains, leading to more intelligent and capable language models. As we continue to explore and understand the intricacies of prompt engineering, these approaches serve as crucial stepping stones towards achieving more advanced and sophisticated AI systems.

Source: wubeedu.com
